Usage

CoRD was originally designed for use in a cluster computing environment, so the interface we present here is a command-line interface. If a GUI would be helpful to you, please let us know by opening a new issue on GitHub.

Currently, there are two things you can do with CoRD:

- Run a coupled RipCAS-DFLOW model

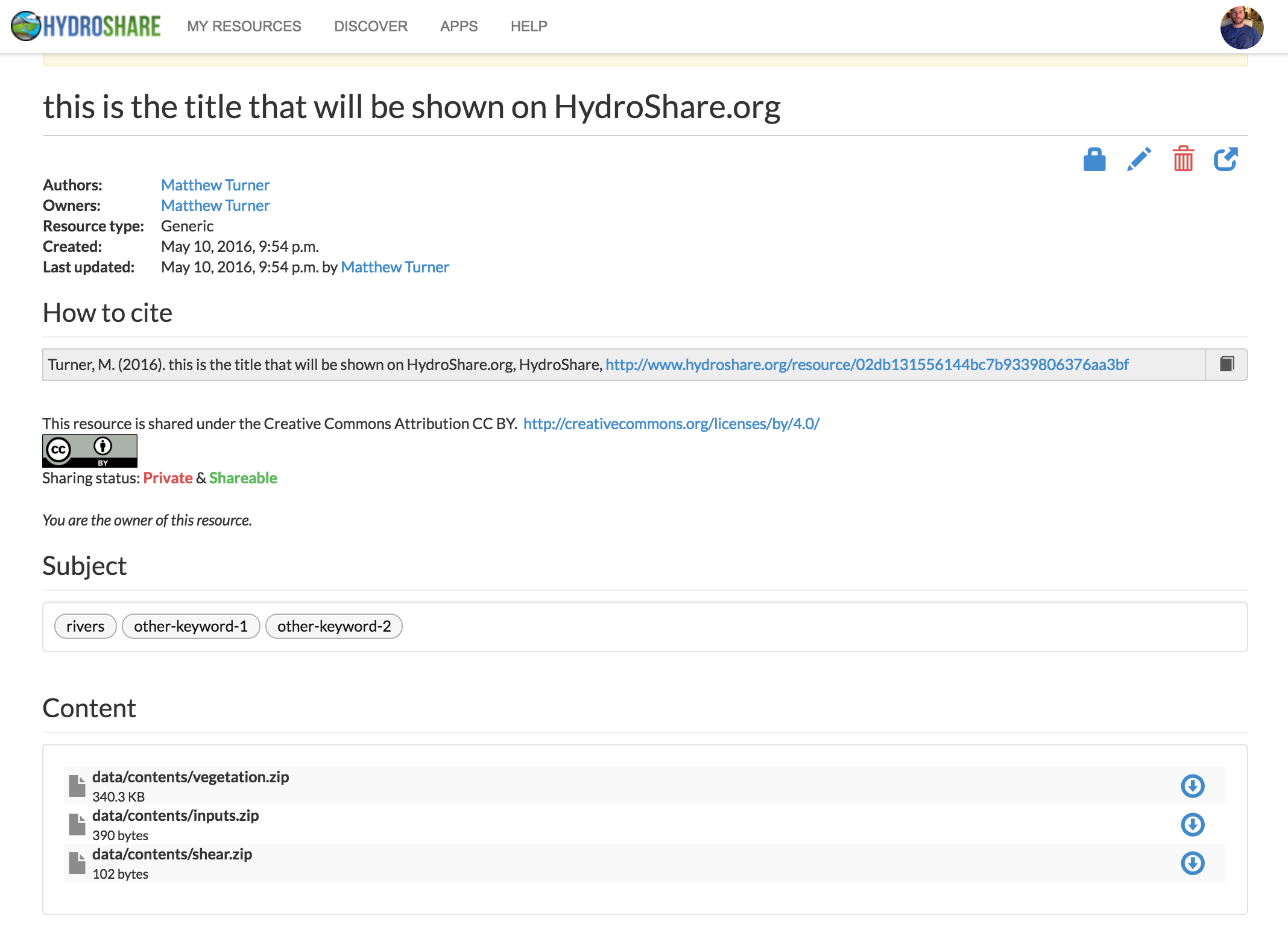

- Post the outputs of the RipCAS-DFLOW model to HydroShare

HydroShare is a web application for storing, sharing, and publishing data. It also provides a Python API, which CoRD uses internally. To push data to HydroShare, you'll need to sign up for a HydroShare account.

We offer two options for running a coupled RipCAS-DFLOW model. The first, Run a coupled model with config file parameters, reads the inputs from a configuration file. The second, Use the interactive command to run CoRD, lets the user enter the required information as command-line options, and CoRD prompts for anything left out. The following guide introduces the three commands: two for running RipCAS-DFLOW and one for posting data to HydroShare.

Quick Reference Guide

To see CoRD's built-in help, run

    cord

which prints the following:

    Usage: cord [OPTIONS] COMMAND [ARGS]

    Options:
      --debug
      --logfile TEXT
      --help          Show this message and exit.

    Commands:
      from_config  Run CoRD with params from <config_file>
      interactive  Run CoRD interactively or with options
      post_hs      Post the model run data to HydroShare

Quick note on the --logfile option

The most important option here is --logfile, which sets the log file. If you don't set it, the log file name defaults to the full data_directory path with slashes replaced by dashes and a .log extension. This can produce unwieldy names if you are working with many model runs at once. This option works with the from_config and interactive commands.

No log file is generated for the post_hs command.
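The following minimal Python sketch makes the default naming rule concrete. `default_log_name` is a hypothetical helper, not part of CoRD's API. It assumes POSIX-style paths, and it applies the rule literally, so the leading slash becomes a leading dash. CoRD's actual handling of that detail may differ.

```python
import os


def default_log_name(data_dir: str) -> str:
    """Hypothetical helper (not CoRD's API) mimicking the documented default:
    the full data_directory path with slashes replaced by dashes, plus '.log'.
    Assumes POSIX-style paths."""
    full_path = os.path.abspath(data_dir)  # expand a relative path to a full path
    return full_path.replace("/", "-") + ".log"


if __name__ == "__main__":
    print(default_log_name("/home/me/runs/debug_data_dir"))
    # -> -home-me-runs-debug_data_dir.log
```

With several runs under a deep directory tree, these names get long quickly, so passing --logfile explicitly is often tidier.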

Help for individual commands

You can also get help for individual commands. For example, to get help for the post-to-HydroShare command, post_hs, run

    cord post_hs --help

which prints this help message:

    Usage: cord post_hs [OPTIONS]

      Post the model run data to HydroShare

    Options:
      --username TEXT
      --password TEXT
      --modelrun-dir TEXT
      --include-shear-nc BOOLEAN
      --resource-title TEXT
      -k, --keyword TEXT
      --help                      Show this message and exit.
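If you script uploads, a small Python sketch like the one below can assemble a post_hs command line from the options listed in the help text. The helper name `post_hs_argv`, the example values, and the assumption that `-k` may be repeated once per keyword are all illustrative. Check `cord post_hs --help` on your installation before relying on it. The returned list could be passed to `subprocess.run`.

```python
import shlex
from typing import List, Optional, Sequence


def post_hs_argv(
    modelrun_dir: str,
    resource_title: str,
    username: str,
    password: Optional[str] = None,
    include_shear_nc: bool = False,
    keywords: Sequence[str] = (),
) -> List[str]:
    """Hypothetical helper: build a `cord post_hs` command line from the
    options shown in the help text. --password is added only if given."""
    argv = [
        "cord", "post_hs",
        "--username", username,
        "--modelrun-dir", modelrun_dir,
        # BOOLEAN options take a string such as "True" or "False"
        "--include-shear-nc", str(include_shear_nc),
        "--resource-title", resource_title,
    ]
    if password is not None:
        argv += ["--password", password]
    # Assumption: -k/--keyword can be given once per keyword
    for keyword in keywords:
        argv += ["-k", keyword]
    return argv


if __name__ == "__main__":
    argv = post_hs_argv(
        "debug_data_dir",
        "RipCAS-DFLOW debug run",
        username="myuser",
        keywords=["RipCAS", "DFLOW"],
    )
    print(shlex.join(argv))  # shell-quoted, e.g. for copying into a job script
```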

Run a coupled model with config file parameters

DFLOW has users provide a configuration file that specifies the locations of input files, model parameters, and so on. In that spirit, CoRD also lets users specify these settings in a configuration file. Below is the configuration template file, which can also be downloaded here.

    # Default configuration file; customize as necessary.
    #
    # To enable syncing with HydroShare, set SYNC_HYDROSHARE to "True" and
    # add your HydroShare login info.

    [General]
    DATA_DIR = debug_data_dir # must create the directory before running
    INITIAL_VEGETATION_MAP = data/ripcas_inputs/vegclass_2z.asc
    VEGZONE_MAP = data/ripcas_inputs/zonemap_2z.asc
    RIPCAS_REQUIRED_DATA = data/ripcas_inputs/ripcas-data-requirements.xlsx
    PEAK_FLOWS_FILE = data/peak.txt
    GEOMETRY_FILE = data/dflow_inputs/DBC_geometry.xyz
    STREAMBED_ROUGHNESS = 0.04
    STREAMBED_SLOPE = 0.001
    #
    # python import style, e.g. my_dflow_module.my_dflow_fun
    # defaults to a function that calls qsub dflow_mpi.pbs
    DFLOW_RUN_FUN =
    # will default to the data_dir with dashes replacing slashes if blank
    LOG_F =
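If you script around CoRD, the Python sketch below reads a file in this format with the standard-library `configparser` and checks for blank required values. It is an illustration only, and CoRD's own config loader may behave differently. `load_general` and `REQUIRED` are hypothetical names, and treating every key except `DFLOW_RUN_FUN` and `LOG_F` as mandatory is an assumption based on the template's comments. By default, `configparser` keeps a trailing comment, like the one after `DATA_DIR`, as part of the value. Setting `inline_comment_prefixes` strips it.

```python
import configparser

# The template's [General] section, trailing comment on DATA_DIR included.
TEMPLATE = """\
[General]
DATA_DIR = debug_data_dir # must create the directory before running
INITIAL_VEGETATION_MAP = data/ripcas_inputs/vegclass_2z.asc
VEGZONE_MAP = data/ripcas_inputs/zonemap_2z.asc
RIPCAS_REQUIRED_DATA = data/ripcas_inputs/ripcas-data-requirements.xlsx
PEAK_FLOWS_FILE = data/peak.txt
GEOMETRY_FILE = data/dflow_inputs/DBC_geometry.xyz
STREAMBED_ROUGHNESS = 0.04
STREAMBED_SLOPE = 0.001
DFLOW_RUN_FUN =
LOG_F =
"""

# Assumption: these keys need values; DFLOW_RUN_FUN and LOG_F may stay blank.
REQUIRED = [
    "DATA_DIR", "INITIAL_VEGETATION_MAP", "VEGZONE_MAP",
    "RIPCAS_REQUIRED_DATA", "PEAK_FLOWS_FILE", "GEOMETRY_FILE",
    "STREAMBED_ROUGHNESS", "STREAMBED_SLOPE",
]


def load_general(text: str) -> dict:
    """Parse CoRD-style config text and return the [General] settings."""
    # Strip trailing "# ..." comments (they must follow whitespace)
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",))
    parser.optionxform = str  # keep upper-case key names as written
    parser.read_string(text)
    general = parser["General"]
    missing = [key for key in REQUIRED if not general.get(key)]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    settings = dict(general)
    # Convert the numeric parameters to floats
    for key in ("STREAMBED_ROUGHNESS", "STREAMBED_SLOPE"):
        settings[key] = general.getfloat(key)
    return settings


if __name__ == "__main__":
    cfg = load_general(TEMPLATE)
    print(cfg["DATA_DIR"], cfg["STREAMBED_ROUGHNESS"])
```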

The template lists all the files and parameters required to run CoRD. We explain each of them below. First, though, we show how to run CoRD with a config file, and then, in the next section, the equivalent command using interactive.

Once the configuration file is ready and saved to, for example, /path/to/myconfig.conf, run CoRD with

    cord from_config /path/to/myconfig.conf
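When you launch many model runs at once, it can help to generate one config file per run and give each run its own log file. The Python sketch below does that with `configparser`. `write_config`, `from_config_argv`, `BASE`, and the `run_rough_*` directory names are hypothetical. The sketch also assumes CoRD accepts a file written by `configparser` in this form. Per the `cord [OPTIONS] COMMAND` usage line, `--logfile` is placed before `from_config`.

```python
import configparser
import shlex
from pathlib import Path

# Values from the configuration template; per-run overrides replace some of them.
BASE = {
    "DATA_DIR": "debug_data_dir",
    "INITIAL_VEGETATION_MAP": "data/ripcas_inputs/vegclass_2z.asc",
    "VEGZONE_MAP": "data/ripcas_inputs/zonemap_2z.asc",
    "RIPCAS_REQUIRED_DATA": "data/ripcas_inputs/ripcas-data-requirements.xlsx",
    "PEAK_FLOWS_FILE": "data/peak.txt",
    "GEOMETRY_FILE": "data/dflow_inputs/DBC_geometry.xyz",
    "STREAMBED_ROUGHNESS": "0.04",
    "STREAMBED_SLOPE": "0.001",
    "DFLOW_RUN_FUN": "",
    "LOG_F": "",
}


def write_config(path: Path, **overrides) -> Path:
    """Write a [General] section built from BASE plus per-run overrides."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the template's upper-case key names
    parser["General"] = {**BASE, **{k: str(v) for k, v in overrides.items()}}
    with open(path, "w") as f:
        parser.write(f)
    return path


def from_config_argv(config_path: Path, logfile: str) -> list:
    """Build the command; --logfile is a top-level option, so it comes first."""
    return ["cord", "--logfile", logfile, "from_config", str(config_path)]


if __name__ == "__main__":
    for roughness in (0.03, 0.04, 0.05):
        run_dir = Path(f"run_rough_{roughness}")
        run_dir.mkdir(exist_ok=True)  # DATA_DIR must exist before running
        conf = write_config(Path(f"{run_dir}.conf"),
                            DATA_DIR=run_dir, STREAMBED_ROUGHNESS=roughness)
        print(shlex.join(from_config_argv(conf, f"{run_dir}.log")))
```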

Use the interactive command to run CoRD

This command is called “interactive” because CoRD prompts the user for any files and parameters that are not specified as options. Here is the equivalent of the from_config example above, with all options specified on the command line. Note that the directory passed to --data-dir must exist.

    cord interactive \
        --data-dir=debug_data_dir \
        --initial-veg_map=data/ripcas_inputs/vegclass_2z.asc \
        --vegzone-map=data/ripcas_inputs/zonemap_2z.asc \
        --ripcas-required-data=data/ripcas_inputs/ripcas-data-requirements.xlsx \
        --peak-flows-file=data/peak.txt \
        --geometry-file=data/dflow_inputs/DBC_geometry.xyz \
        --streambed-roughness=0.04 \
        --streambed-slope=0.001
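The from_config and interactive examples use the same values, so you can write down the correspondence between config keys and interactive options directly. The Python sketch below builds an interactive command from config-style settings. `interactive_argv` and `OPTION_FOR_KEY` are hypothetical names. The option spellings, including `--initial-veg_map`, are copied from the example above rather than checked against CoRD's source. Missing or blank keys are left out, so CoRD will prompt for them.

```python
import shlex

# Config-file keys mapped to the interactive options used in the example above.
OPTION_FOR_KEY = {
    "DATA_DIR": "--data-dir",
    "INITIAL_VEGETATION_MAP": "--initial-veg_map",
    "VEGZONE_MAP": "--vegzone-map",
    "RIPCAS_REQUIRED_DATA": "--ripcas-required-data",
    "PEAK_FLOWS_FILE": "--peak-flows-file",
    "GEOMETRY_FILE": "--geometry-file",
    "STREAMBED_ROUGHNESS": "--streambed-roughness",
    "STREAMBED_SLOPE": "--streambed-slope",
}


def interactive_argv(settings: dict) -> list:
    """Build a `cord interactive` command; unset keys are omitted."""
    argv = ["cord", "interactive"]
    for key, option in OPTION_FOR_KEY.items():
        value = settings.get(key)
        if value not in (None, ""):  # skip blanks so CoRD prompts instead
            argv.append(f"{option}={value}")
    return argv


if __name__ == "__main__":
    partial = {"DATA_DIR": "debug_data_dir", "STREAMBED_SLOPE": 0.001}
    print(shlex.join(interactive_argv(partial)))
    # -> cord interactive --data-dir=debug_data_dir --streambed-slope=0.001
```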